tick.robust.RobustLinearRegression

-

class

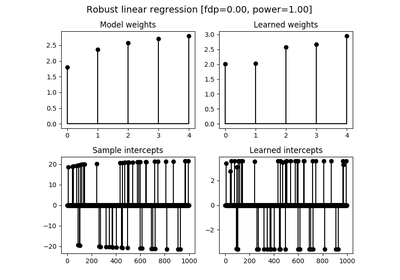

tick.robust.RobustLinearRegression(C_sample_intercepts, C=1000.0, fdr=0.05, penalty='l2', fit_intercept=True, refit=False, solver='agd', warm_start=False, step=None, tol=1e-07, max_iter=200, verbose=True, print_every=10, record_every=10, elastic_net_ratio=0.95, slope_fdr=0.05)[source]¶ Robust linear regression learner. This is linear regression with sample intercepts (one for each sample). An ordered-L1 penalization is used on the individual intercepts, in order to obtain a sparse vector of individual intercepts, with a (theoretically) guaranteed False Discovery Rate control (see

fdrbelow).The features matrix should contain only continuous features, and columns should be normalized. Note that C_sample_intercepts is a sensitive parameter, that should be tuned in theory as n_samples / noise_level, where noise_level can be chosen as a robust estimation of the standard deviation, using for instance

tick.hawkes.inference.std_madandtick.hawkes.inference.std_iqron the array of labels.- Parameters

C_sample_intercepts : float

Level of the ProxSlope penalization used for the detection of outliers. Ideally, this should be equal to n_samples / noise_level, where noise_level is an estimated noise level.

C : float, default=1e3

Level of penalization of the model weights.

fdr : float, default=0.05

Target false discovery rate for the detection of outliers, namely for the detection of non-zero entries in sample_intercepts.

penalty : {‘none’, ‘l1’, ‘l2’, ‘elasticnet’, ‘slope’}, default=‘l2’

The penalization to use. Default ‘l2’, namely ridge penalization.

fit_intercept : bool, default=True

If True, include an intercept in the model, namely a global intercept.

refit : bool, default=False

Not implemented yet

solver : {‘gd’, ‘agd’}, default=‘agd’

The name of the solver to use. For now, only gradient descent (‘gd’) and accelerated gradient descent (‘agd’) are available.

warm_start : bool, default=False

If True, learning will start from the last reached solution.

step : float, default=None

Initial step size used for learning.

tol : float, default=1e-7

The tolerance of the solver (iterations stop when the stopping criterion is below it).

max_iter : int, default=200

Maximum number of iterations of the solver.

verbose : bool, default=True

If True, the solver prints progress information; otherwise it prints nothing (but records information in history anyway).

print_every : int, default=10

Print history information when n_iter (iteration number) is a multiple of print_every.

record_every : int, default=10

Record history information when n_iter (iteration number) is a multiple of record_every.

- Attributes

weights : numpy.array, shape=(n_features,)

The learned weights of the model (not including the intercept).

sample_intercepts : numpy.array, shape=(n_samples,)

Sample intercepts. This should be a sparse vector, since a non-zero entry means that the sample is an outlier.

intercept : float or None

The intercept, if fit_intercept=True, otherwise None.

coeffs : numpy.array, shape=(n_features + n_samples + 1,)

The full array of coefficients of the model, namely the concatenation of weights, sample_intercepts and intercept.
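The layout of coeffs described above can be illustrated with a small NumPy sketch. The arrays below are made-up values rather than the output of a fitted learner, the helper name split_coeffs is hypothetical, and the sketch assumes fit_intercept=True so that the trailing intercept entry is present.

```python
import numpy as np


def split_coeffs(coeffs, n_features, n_samples):
    """Split a concatenated coefficient array into its three parts.

    Hypothetical helper, assuming the layout
    [weights, sample_intercepts, intercept] and fit_intercept=True.
    """
    coeffs = np.asarray(coeffs, dtype=float)
    expected = n_features + n_samples + 1
    if coeffs.shape != (expected,):
        raise ValueError(f"expected shape ({expected},), got {coeffs.shape}")
    weights = coeffs[:n_features]
    sample_intercepts = coeffs[n_features:n_features + n_samples]
    intercept = float(coeffs[-1])
    return weights, sample_intercepts, intercept


# Made-up values for illustration: 2 features, 5 samples, 1 global intercept.
weights = np.array([0.5, -1.2])
sample_intercepts = np.array([0.0, 0.0, 3.1, 0.0, -2.4])
intercept = 0.7
coeffs = np.concatenate([weights, sample_intercepts, [intercept]])

w, mu, b = split_coeffs(coeffs, n_features=2, n_samples=5)

# A non-zero sample intercept flags the corresponding sample as an outlier.
outlier_indices = np.flatnonzero(mu)
print(outlier_indices)
```

Reading the outliers off the non-zero entries of sample_intercepts follows the attribute description; on real solver output one might prefer a small absolute threshold over an exact comparison with zero, which is a design choice rather than something the documentation prescribes.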

- __init__(C_sample_intercepts, C=1000.0, fdr=0.05, penalty='l2', fit_intercept=True, refit=False, solver='agd', warm_start=False, step=None, tol=1e-07, max_iter=200, verbose=True, print_every=10, record_every=10, elastic_net_ratio=0.95, slope_fdr=0.05)

- fit(X: object, y: numpy.array)

Fit the model according to the given training data.

- Parameters

X : np.ndarray or scipy.sparse.csr_matrix, shape=(n_samples, n_features)

Training vector, where n_samples is the number of samples and n_features is the number of features.

y : np.array, shape=(n_samples,)

Target vector relative to X.

- Returns

self : LearnerGLM

The fitted instance of the model
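The class description recommends normalized feature columns and a C_sample_intercepts of roughly n_samples / noise_level before fitting. A minimal NumPy sketch of that preparation follows, assuming a median-absolute-deviation estimate of the noise level computed on the labels. The helper names standardize_columns and mad_std are hypothetical, the factor 1.4826 is the usual Gaussian consistency constant, and the data is simulated; this is not tick's own std_mad implementation.

```python
import numpy as np


def standardize_columns(X):
    """Center each column and scale it to unit standard deviation."""
    X = np.asarray(X, dtype=float)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # leave constant columns unscaled
    return (X - X.mean(axis=0)) / std


def mad_std(y):
    """Robust standard deviation estimate from the median absolute deviation."""
    y = np.asarray(y, dtype=float)
    mad = np.median(np.abs(y - np.median(y)))
    return 1.4826 * mad


# Simulated data (an assumption for illustration only).
rng = np.random.default_rng(0)
n_samples, n_features = 200, 3
X = standardize_columns(
    rng.normal(loc=5.0, scale=2.0, size=(n_samples, n_features))
)
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=0.3, size=n_samples)
y[:5] += 20.0  # a few gross outliers among the labels

# Noise level estimated on the array of labels, then the suggested tuning.
noise_level = mad_std(y)
C_sample_intercepts = n_samples / noise_level
```

The resulting value would then be passed as the C_sample_intercepts argument of the constructor, with X and y given to fit. Because the estimate is median-based, the handful of shifted labels has little effect on it, which is the reason for preferring a robust estimator here.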

- get_params()

Get parameters for this estimator.

- Returns

params : dict

Parameter names mapped to their values.

- predict(X)

Not available. This model is intended for estimating and detecting outliers. It cannot, for now, predict labels for unobserved features.

- score(X)

Not available. This model is intended for estimating and detecting outliers. Score computation is not meaningful in this setting.

- set_params(**kwargs)

Set the parameters for this learner.

- Parameters

**kwargs :

Named arguments to update in the learner.

- Returns

output : LearnerRobustGLM

self with updated parameters.