tick.solver.GD¶

-

class

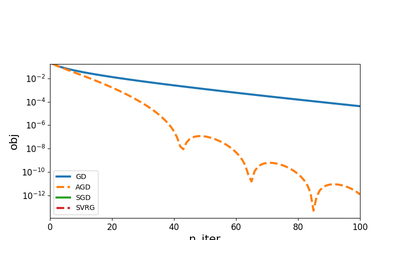

tick.solver.GD(step: float = None, tol: float = 0.0, max_iter: int = 100, linesearch: bool = True, linesearch_step_increase: float = 2.0, linesearch_step_decrease: float = 0.5, verbose: bool = True, print_every: int = 10, record_every: int = 1)[source]¶ Proximal gradient descent

For the minimization of objectives of the form

\[f(w) + g(w),\]

where \(f\) has a smooth gradient and \(g\) is prox-capable. Function \(f\) corresponds to the `model.loss` method of the model (passed with `set_model` to the solver) and \(g\) corresponds to the `prox.value` method of the prox (passed with the `set_prox` method). One iteration of `GD` is as follows:

\[w \gets \mathrm{prox}_{\eta g} \big(w - \eta \nabla f(w) \big),\]

where \(\nabla f(w)\) is the gradient of \(f\) given by the `model.grad` method and \(\mathrm{prox}_{\eta g}\) is given by the `prox.call` method. The step-size \(\eta\) can be tuned with `step`. The iterations stop whenever tolerance `tol` is achieved, or after `max_iter` iterations. The obtained solution \(w\) is returned by the `solve` method, and is also stored in the `solution` attribute of the solver.

- Parameters

step : float, default=None

Step-size parameter, the most important parameter of the solver. Whenever possible, this can be automatically tuned as `step = 1 / model.get_lip_best()`. If `linesearch=True`, this is the first step-size to be used in the linesearch (it should be chosen too large rather than too small).

tol : float, default=0.0

The tolerance of the solver (iterations stop when the stopping criterion is below it).

max_iter : int, default=100

Maximum number of iterations of the solver.

linesearch : bool, default=True

If `True`, use backtracking linesearch to tune the step automatically.

verbose : bool, default=True

If `True`, the solver prints history while it runs; otherwise nothing is displayed, but history is recorded anyway.

print_every : int, default=10

Print history information every time the iteration number is a multiple of `print_every`. Used only if `verbose` is True.

record_every : int, default=1

Save history information every time the iteration number is a multiple of `record_every`.

linesearch_step_increase : float, default=2.0

Factor of step increase when using linesearch.

linesearch_step_decrease : float, default=0.5

Factor of step decrease when using linesearch.
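The roles of `linesearch_step_increase` and `linesearch_step_decrease` can be pictured with a backtracking scheme in the style of Beck and Teboulle. The NumPy sketch below is an illustration under assumptions, not tick's implementation: the helper `backtracking_prox_step`, its quadratic upper-bound acceptance test, and the choice to enlarge the step once before shrinking it are all hypothetical.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (used here as an example g)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def backtracking_prox_step(w, step, loss, grad, prox,
                           step_increase=2.0, step_decrease=0.5,
                           max_trials=50):
    """One proximal gradient step with a backtracking search on the step.

    Assumption: a trial step is accepted when the quadratic upper bound
    f(w_new) <= f(w) + <grad f(w), d> + ||d||^2 / (2 * step), d = w_new - w,
    holds. tick's own acceptance test may differ.
    """
    loss_w, grad_w = loss(w), grad(w)
    step *= step_increase  # start from a deliberately large trial step
    for _ in range(max_trials):
        w_new = prox(w - step * grad_w, step)
        d = w_new - w
        if loss(w_new) <= loss_w + grad_w @ d + (d @ d) / (2.0 * step):
            break
        step *= step_decrease  # trial step too large: shrink it
    return w_new, step


# Toy problem: f(w) = 0.5 * ||A w - b||^2, g(w) = lam * ||w||_1
rng = np.random.default_rng(0)
A = rng.standard_normal((50, 5))
w_true = np.array([1.0, -2.0, 0.0, 0.0, 3.0])
b = A @ w_true
lam = 0.1


def loss(w):
    return 0.5 * np.sum((A @ w - b) ** 2)


def grad(w):
    return A.T @ (A @ w - b)


def prox(v, step):
    return soft_threshold(v, step * lam)


w, step = np.zeros(5), 1.0
objective_start = loss(w) + lam * np.abs(w).sum()
for _ in range(100):
    w, step = backtracking_prox_step(w, step, loss, grad, prox)
objective_end = loss(w) + lam * np.abs(w).sum()
```

Starting each search from an enlarged step lets the step size recover after a conservative iteration, which is one plausible reason for exposing both an increase and a decrease factor.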

- Attributes

model : Model

The model used by the solver, passed with the `set_model` method.

prox : Prox

Proximal operator used by the solver, passed with the `set_prox` method.

solution : numpy.array, shape=(n_coeffs,)

Minimizer found by the solver.

history : dict-like

A dict-like object that contains the history of the solver along iterations. It should be accessed using the `get_history` method.

time_start : str

Start date of the call to `solve()`.

time_elapsed : float

Duration of the call to `solve()`, in seconds.

time_end : str

End date of the call to `solve()`.

References

A. Beck and M. Teboulle, A fast iterative shrinkage-thresholding algorithm for linear inverse problems, SIAM journal on imaging sciences, 2009
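As a reading aid, the iteration \(w \gets \mathrm{prox}_{\eta g}(w - \eta \nabla f(w))\) from the class description can be written out with plain NumPy. This is a minimal sketch, not tick's code: the function `proximal_gradient` and its relative-change stopping test are hypothetical, the linesearch is left out, and the step is fixed at \(1 / L\), where \(L\) is the Lipschitz constant of \(\nabla f\).

```python
import numpy as np


def proximal_gradient(grad, prox, x0, step, tol=1e-10, max_iter=100):
    """Iterate w <- prox_{step * g}(w - step * grad f(w)).

    Assumption: iterations stop when the relative change between two
    successive iterates falls below `tol`; tick's criterion may differ.
    """
    w = np.asarray(x0, dtype=float).copy()
    for n_iter in range(1, max_iter + 1):
        w_new = prox(w - step * grad(w), step)
        scale = max(np.linalg.norm(w_new), 1e-12)
        rel_change = np.linalg.norm(w_new - w) / scale
        w = w_new
        if rel_change < tol:
            break
    return w, n_iter


# Example: f(w) = 0.5 * ||A w - b||^2 and g(w) = 0.5 * lam * ||w||^2
rng = np.random.default_rng(42)
A = rng.standard_normal((100, 3))
b = A @ np.array([0.5, -1.0, 2.0])
lam = 1.0


def grad(w):
    return A.T @ (A @ w - b)


def prox(v, step):
    # The prox of step * 0.5 * lam * ||.||^2 is a simple rescaling
    return v / (1.0 + step * lam)


lip = np.linalg.norm(A, 2) ** 2  # Lipschitz constant of grad f
solution, n_iter = proximal_gradient(grad, prox, np.zeros(3),
                                     step=1.0 / lip, max_iter=500)

# Ridge regression has a closed form, which gives a reference point
closed_form = np.linalg.solve(A.T @ A + lam * np.eye(3), A.T @ b)
```

The closed-form ridge solution is computed only to check the sketch; for penalties without a closed form, the iteration is the whole point.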

__init__(step: float = None, tol: float = 0.0, max_iter: int = 100, linesearch: bool = True, linesearch_step_increase: float = 2.0, linesearch_step_decrease: float = 0.5, verbose: bool = True, print_every: int = 10, record_every: int = 1)

Initialize self. See help(type(self)) for accurate signature.

get_history(key=None)

Returns history of the solver

- Parameters

key : str, default=None

If `None`, all history is returned as a `dict`. If `str`, name of the history element to retrieve.

- Returns

output : list or dict

If `key` is None or `key` is not in history, then output is a dict containing the history of all keys. If `key` is the name of an element in the history, output is a `list` containing the history of this element.
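The return rule is easy to misread, so here is a stand-alone sketch of it on a plain `dict`. The helper `lookup_history` and the sample keys `n_iter` and `obj` are hypothetical stand-ins chosen for illustration; they are not taken from tick.

```python
def lookup_history(history, key=None):
    """Mimic the documented return rule of get_history on a plain dict."""
    if key is None or key not in history:
        # No key, or unknown key: the whole history is returned
        return dict(history)
    # Known key: only the recorded values of that element
    return list(history[key])


history = {"n_iter": [0, 10, 20], "obj": [3.2, 1.1, 0.9]}

everything = lookup_history(history)
objectives = lookup_history(history, "obj")
fallback = lookup_history(history, "unknown_key")
```

Note that, under the documented rule, an unknown key does not raise an error but yields the full history, so callers that expect a `list` may want to check the returned type.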

objective(coeffs, loss: float = None)

Compute the objective function

- Parameters

coeffs : np.array, shape=(n_coeffs,)

Point where the objective is computed.

loss : float, default=`None`

Value of the loss at `coeffs`, if already known (passing it avoids recomputing the loss in some cases).

- Returns

output : float

Value of the objective at the given `coeffs`.
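Following the class description, the objective is the sum \(f(w) + g(w)\). The sketch below illustrates how an already known loss value can be reused instead of being recomputed; `objective_value`, `loss_fn` and `penalty_fn` are hypothetical names and not part of tick.

```python
import numpy as np


def objective_value(coeffs, loss_fn, penalty_fn, loss=None):
    """Return f(coeffs) + g(coeffs), reusing `loss` when it is supplied."""
    if loss is None:
        loss = loss_fn(coeffs)  # only evaluated when not already known
    return loss + penalty_fn(coeffs)


def loss_fn(w):
    # f(w) = 0.5 * ||w - 1||^2
    return 0.5 * np.sum((w - 1.0) ** 2)


def penalty_fn(w):
    # g(w) = 0.1 * ||w||_1
    return 0.1 * np.abs(w).sum()


coeffs = np.array([1.0, -1.0, 3.0])
full = objective_value(coeffs, loss_fn, penalty_fn)
reused = objective_value(coeffs, loss_fn, penalty_fn, loss=loss_fn(coeffs))
```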

set_model(model: tick.base_model.model.Model)

Set model in the solver

- Parameters

model : Model

The model to set in the solver. It provides the first-order information about the problem (loss, gradient, among other things).

- Returns

output : Solver

The same instance with the given model.

set_prox(prox: tick.prox.base.prox.Prox)

Set proximal operator in the solver

- Parameters

prox : Prox

The proximal operator of the penalization function.

- Returns

output : Solver

The solver with the given prox.

Notes

In some solvers, `set_model` must be called before `set_prox`, otherwise an error might be raised.

solve(x0=None, step=None)

Launch the solver

- Parameters

x0 : np.array, shape=(n_coeffs,), default=`None`

Starting point of the solver.

step : float, default=`None`

Step-size or learning rate for the solver. This can also be set using the `step` attribute.

- Returns

output : np.array, shape=(n_coeffs,)

Obtained minimizer for the problem, same as the `solution` attribute.