tick.hawkes.HawkesSumGaussians¶

-

class

tick.hawkes.HawkesSumGaussians(max_mean_gaussian, n_gaussians=5, step_size=1e-07, C=1000.0, lasso_grouplasso_ratio=0.5, max_iter=50, tol=1e-05, n_threads=1, verbose=False, print_every=10, record_every=10, approx=0, em_max_iter=30, em_tol=None)[source]¶ A class that implements parametric inference for Hawkes processes with parametrisation of the kernels as sum of Gaussian basis functions and a mix of Lasso and group-lasso regularization

Hawkes processes are point processes defined by the intensity:

\[\forall i \in [1 \dots D], \quad \lambda_i(t) = \mu_i + \sum_{j=1}^D \sum_{t_k^j < t} \phi_{ij}(t - t_k^j)\]where

\(D\) is the number of nodes

\(\mu_i\) are the baseline intensities

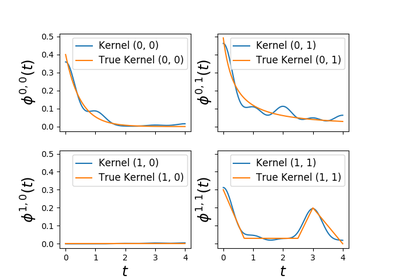

\(\phi_{ij}\) are the kernels

\(t_k^j\) are the timestamps of all events of node \(j\)

and with an parametrisation of the kernels as sum of Gaussian basis functions

\[\phi_{ij}(t) = \sum_{m=1}^M \alpha^{ij}_m f (t - t_m), \quad f(t) = (2 \pi \sigma^2)^{-1} \exp(- t^2 / (2 \sigma^2))\]In our implementation we denote:

Integer \(D\) by the attribute

n_nodesVector \(\mu \in \mathbb{R}^{D}\) by the attribute

baselineVector \((t_m) \in \mathbb{R}^{M}\) by the variable

means_gaussiansNumber \(\sigma\) by the variable

std_gaussianTensor \(A = (\alpha^{ij}_m)_{ijm} \in \mathbb{R}^{D \times D \times M}\) by the attribute

amplitudes

- Parameters

max_mean_gaussian :

floatThe mean of the last Gaussian basis function. This can be considered a proxy of the kernel support.

n_gaussians :

intThe number of Gaussian basis functions used to approximate each kernel.

step_size :

floatThe step-size used in the optimization for the EM algorithm.

C :

float, default=1e3Level of penalization

lasso_grouplasso_ratio :

float, default=0.5Ratio of Lasso-Nuclear regularization mixing parameter with 0 <= ratio <= 1.

For ratio = 0 this is Group-Lasso regularization

For ratio = 1 this is lasso (L1) regularization

For 0 < ratio < 1, the regularization is a linear combination of Lasso and Group-Lasso.

max_iter :

int, default=50Maximum number of iterations of the solving algorithm

tol :

float, default=1e-5The tolerance of the solving algorithm (iterations stop when the stopping criterion is below it). If not reached it does

max_iteriterationsn_threads :

int, default=1Number of threads used for parallel computation.

verbose :

bool, default=FalseIf

True, we verbose thingsif

int <= 0: the number of physical cores available on the CPUotherwise the desired number of threads

print_every :

int, default=10Print history information when

n_iter(iteration number) is a multiple ofprint_everyrecord_every :

int, default=10Record history information when

n_iter(iteration number) is a multiple ofrecord_every- Attributes

n_nodes :

intNumber of nodes / components in the Hawkes model

baseline :

np.array, shape=(n_nodes,)Inferred baseline of each component’s intensity

amplitudes :

np.ndarray, shape=(n_nodes, n_nodes, n_gaussians)Inferred adjacency matrix

means_gaussians :

np.array, shape=(n_gaussians,)The means of the Gaussian basis functions.

std_gaussian :

floatThe standard deviation of each Gaussian basis function.

References

Xu, Farajtabar, and Zha (2016, June) in ICML, Learning Granger Causality for Hawkes Processes.

-

__init__(max_mean_gaussian, n_gaussians=5, step_size=1e-07, C=1000.0, lasso_grouplasso_ratio=0.5, max_iter=50, tol=1e-05, n_threads=1, verbose=False, print_every=10, record_every=10, approx=0, em_max_iter=30, em_tol=None)[source]¶ Initialize self. See help(type(self)) for accurate signature.

-

fit(events, end_times=None, baseline_start=None, amplitudes_start=None)[source]¶ Fit the model according to the given training data.

- Parameters

events :

listoflistofnp.ndarrayList of Hawkes processes realizations. Each realization of the Hawkes process is a list of n_node for each component of the Hawkes. Namely

events[i][j]contains a one-dimensionalnumpy.arrayof the events’ timestamps of component j of realization i.If only one realization is given, it will be wrapped into a list

end_times :

np.ndarrayorfloat, default = NoneList of end time of all hawkes processes that will be given to the model. If None, it will be set to each realization’s latest time. If only one realization is provided, then a float can be given.

baseline_start :

Noneornp.ndarray, shape=(n_nodes)Set initial value of baseline parameter If

Nonestarts with uniform 1 valuesamplitudes_start :

Noneornp.ndarray, shape=(n_nodes, n_nodes, n_gaussians)Set initial value of amplitudes parameter If

Nonestarts with random values uniformly sampled between 0.5 and 0.9`

-

get_history(key=None)¶ Returns history of the solver

- Parameters

key :

str, default=NoneIf

Noneall history is returned as adictIf

str, name of the history element to retrieve

- Returns

output :

listordictIf

keyis None orkeyis not in history then output is a dict containing history of all keysIf

keyis the name of an element in the history, output is alistcontaining the history of this element

-

get_kernel_norms()[source]¶ Computes kernel norms. This makes our learner compliant with

tick.plot.plot_hawkes_kernel_normsAPI- Returns

norms :

np.ndarray, shape=(n_nodes, n_nodes)2d array in which each entry i, j corresponds to the norm of kernel i, j

-

get_kernel_supports()[source]¶ Computes kernel support. This makes our learner compliant with

tick.plot.plot_hawkes_kernelsAPI- Returns

output :

np.ndarray, shape=(n_nodes, n_nodes)2d array in which each entry i, j corresponds to the support of kernel i, j

-

get_kernel_values(i, j, abscissa_array)[source]¶ Computes value of the specified kernel on given time values. This makes our learner compliant with

tick.plot.plot_hawkes_kernelsAPI- Parameters

i :

intFirst index of the kernel

j :

intSecond index of the kernel

abscissa_array :

np.ndarray, shape=(n_points, )1d array containing all the times at which this kernel will computes it value

- Returns

output :

np.ndarray, shape=(n_points, )1d array containing the values of the specified kernels at the given times.

-

objective(coeffs, loss: float = None)[source]¶ Compute the objective minimized by the solver at

coeffs- Parameters

coeffs :

numpy.ndarray, shape=(n_coeffs,)The objective is computed at this point

loss :

float, default=`None`Gives the value of the loss if already known (allows to avoid its computation in some cases)

- Returns

output :

floatValue of the objective at given

coeffs