tick.linear_model.ModelLogReg

-

class

tick.linear_model.ModelLogReg(fit_intercept: bool = True, n_threads: int = 1)[source]¶ Logistic regression model for binary classification. This class gives first order information (gradient and loss) for this model and can be passed to any solver through the solver’s

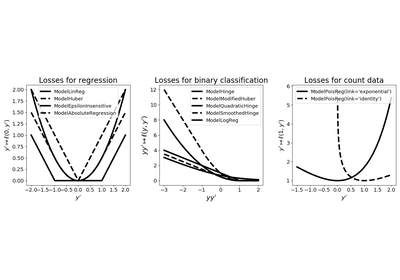

set_modelmethod.Given training data \((x_i, y_i) \in \mathbb R^d \times \{ -1, 1 \}\) for \(i=1, \ldots, n\), this model considers a goodness-of-fit

\[f(w, b) = \frac 1n \sum_{i=1}^n \ell(y_i, b + x_i^\top w),\]

where \(w \in \mathbb R^d\) is a vector containing the model weights, \(b \in \mathbb R\) is the intercept (used only when fit_intercept=True) and \(\ell : \mathbb R^2 \rightarrow \mathbb R\) is the loss given by

\[\ell(y, y') = \log(1 + \exp(-y y'))\]

for \(y \in \{ -1, 1\}\) and \(y' \in \mathbb R\). Data is passed to this model through the fit(X, y) method, where X is the features matrix (dense or sparse) and y is the vector of labels.

- Parameters

fit_intercept : bool

If True, the model uses an intercept

- Attributes

features : {numpy.ndarray, scipy.sparse.csr_matrix}, shape=(n_samples, n_features)

The features matrix, either dense or sparse

labels : numpy.ndarray, shape=(n_samples,) (read-only)

The labels vector

n_samples : int (read-only)

Number of samples

n_features : int (read-only)

Number of features

n_coeffs : int (read-only)

Total number of coefficients of the model

dtype : {'float64', 'float32'}

Type of the data arrays used.

n_threads : int, default=1 (read-only)

Number of threads used for parallel computation.

- if int <= 0: the number of threads available on the CPU
- otherwise the desired number of threads
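For reference, the first order information mentioned in the class description follows by differentiating the goodness-of-fit \(f\). The derivation below is a sketch: the shorthand \(z_i = b + x_i^\top w\) and the sigmoid \(\sigma(t) = 1 / (1 + e^{-t})\) are notation introduced here for convenience and are not part of the original description.

\[
\frac{\partial \ell}{\partial y'}(y, y') = \frac{-y \exp(-y y')}{1 + \exp(-y y')} = -y \, \sigma(-y y'),
\]

\[
\nabla_w f(w, b) = -\frac 1n \sum_{i=1}^n y_i \, \sigma(-y_i z_i) \, x_i,
\qquad
\frac{\partial f}{\partial b}(w, b) = -\frac 1n \sum_{i=1}^n y_i \, \sigma(-y_i z_i).
\]

The partial derivative with respect to \(b\) is only relevant when fit_intercept=True.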

-

__init__(fit_intercept: bool = True, n_threads: int = 1)

Initialize self. See help(type(self)) for accurate signature.

-

fit(features, labels)

Set the data into the model object

- Parameters

features : {numpy.ndarray, scipy.sparse.csr_matrix}, shape=(n_samples, n_features)

The features matrix, either dense or sparse

labels : numpy.ndarray, shape=(n_samples,)

The labels vector

- Returns

output : ModelLogReg

The current instance with given data
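Since the loss is defined for labels in \(\{-1, 1\}\), data that comes with 0/1 labels needs to be converted before it is given to fit. The sketch below prepares a dense features matrix and a labels vector with NumPy only. The toy data is hypothetical, and the choice of C-contiguous float64 arrays is an assumption based on the dtype attribute listed above, not a documented requirement.

```python
import numpy as np

# Hypothetical toy data: 4 samples, 2 features, labels given as 0/1.
X_raw = [[0.5, 1.0], [1.5, -0.5], [-1.0, 2.0], [0.0, 0.0]]
y01 = np.array([0, 1, 1, 0])

# Features as a C-contiguous float64 matrix of shape (n_samples, n_features).
# (float64 and C-contiguity are assumptions, see the note above.)
X = np.ascontiguousarray(X_raw, dtype=np.float64)

# Map labels from {0, 1} to {-1, +1}, the label set used by the loss.
y = np.where(y01 == 1, 1.0, -1.0)
```

These arrays could then be handed to the model with ModelLogReg(fit_intercept=True).fit(X, y), which returns the model instance.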

-

get_lip_best() → float

Returns the best Lipschitz constant, using all samples. Warning: this might take some time, since it requires an SVD computation.

- Returns

output : float

The best Lipschitz constant
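To see why an SVD is involved: the second derivative of the logistic loss is at most 1/4, so without an intercept the gradient of \(f\) is Lipschitz with constant \(\sigma_{\max}(X)^2 / (4n)\), where \(\sigma_{\max}(X)\) is the largest singular value of the features matrix. The NumPy sketch below computes that quantity. lip_best_sketch is a hypothetical helper, the intercept is ignored, and whether get_lip_best evaluates exactly this expression is an assumption.

```python
import numpy as np

def lip_best_sketch(X):
    """Return sigma_max(X)^2 / (4 n) for a dense features matrix X (no intercept)."""
    n_samples = X.shape[0]
    # Singular values come back in descending order; only the largest is needed.
    sigma_max = np.linalg.svd(X, compute_uv=False)[0]
    return sigma_max ** 2 / (4 * n_samples)

# Diagonal example: singular values are 4 and 3, so the result is 16 / (4 * 2).
X = np.array([[3.0, 0.0], [0.0, 4.0]])
L_best = lip_best_sketch(X)
```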

-

get_lip_max() → float

Returns the maximum Lipschitz constant of individual losses. This is particularly useful for step-size tuning of some solvers.

- Returns

output : float

The maximum Lipschitz constant

-

get_lip_mean() → float

Returns the average Lipschitz constant of individual losses. This is particularly useful for step-size tuning of some solvers.

- Returns

output : float

The average Lipschitz constant
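For the logistic loss without intercept, the gradient of the individual loss of sample \(i\) is Lipschitz with constant \(\|x_i\|^2 / 4\). The sketch below computes these per-sample constants with NumPy, then their maximum and mean, and derives a step size as the inverse of the chosen constant, which is a common heuristic. lip_individual_sketch is a hypothetical helper, and it is an assumption that get_lip_max and get_lip_mean correspond to these quantities.

```python
import numpy as np

def lip_individual_sketch(X):
    """Per-sample constants ||x_i||^2 / 4 for the logistic loss (no intercept)."""
    return np.sum(X ** 2, axis=1) / 4.0

# Squared row norms are 25, 1 and 4.
X = np.array([[3.0, 4.0], [1.0, 0.0], [0.0, 2.0]])
lips = lip_individual_sketch(X)

lip_max = lips.max()    # largest individual constant
lip_mean = lips.mean()  # average individual constant

# Heuristic step size 1 / L for the chosen constant L.
step_from_max = 1.0 / lip_max
```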

-

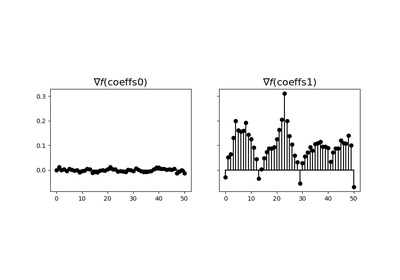

grad(coeffs: numpy.ndarray, out: numpy.ndarray = None) → numpy.ndarray

Computes the gradient of the model at coeffs

- Parameters

coeffs : numpy.ndarray

Vector where gradient is computed

out : numpy.ndarray or None

If None, a new vector containing the gradient is returned; otherwise, the result is saved in out and returned

- Returns

output : numpy.ndarray

The gradient of the model at coeffs

Notes

The fit method must be called to give data to the model before using grad. An error is raised otherwise.
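As an illustration of what grad computes and of how the out argument behaves, here is a minimal NumPy sketch of the gradient of the goodness-of-fit. It is not the library's implementation: logreg_grad_sketch is a hypothetical function, and the coefficient layout (weights first, intercept last) is an assumption.

```python
import numpy as np

def logreg_grad_sketch(coeffs, X, y, out=None):
    """Gradient of the mean logistic loss at coeffs = [w, b] (intercept last, assumed)."""
    w, b = coeffs[:-1], coeffs[-1]
    z = y * (X @ w + b)                      # margins y_i * (b + x_i^T w)
    # 1 / (1 + exp(z)), written with tanh so that large |z| does not overflow.
    s = 0.5 * (1.0 - np.tanh(0.5 * z))
    residual = -y * s / X.shape[0]
    if out is None:
        out = np.empty_like(coeffs)          # allocate a new vector
    out[:-1] = X.T @ residual                # derivative with respect to w
    out[-1] = residual.sum()                 # derivative with respect to b
    return out

X = np.array([[1.0, 2.0], [-1.0, 0.5], [0.0, -1.0]])
y = np.array([1.0, -1.0, 1.0])
coeffs = np.zeros(3)

buffer = np.empty(3)
g = logreg_grad_sketch(coeffs, X, y, out=buffer)  # result is written into buffer
```

Passing a preallocated buffer avoids allocating a new vector at every call, which matters when the gradient is evaluated repeatedly inside a solver loop.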

-

loss(coeffs: numpy.ndarray) → float

Computes the value of the goodness-of-fit at coeffs

- Parameters

coeffs : numpy.ndarray

The loss is computed at this point

- Returns

output : float

The value of the loss

Notes

The fit method must be called to give data to the model before using loss. An error is raised otherwise.
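The goodness-of-fit value can be reproduced with a few lines of NumPy. The sketch below evaluates \(\log(1 + \exp(-z))\) through np.logaddexp, which avoids overflow for large negative margins. logreg_loss_sketch is a hypothetical function and the coefficient layout (weights first, intercept last) is an assumption, so this is an independent reference computation, not the library's code.

```python
import numpy as np

def logreg_loss_sketch(coeffs, X, y):
    """Mean logistic loss at coeffs = [w, b] (intercept last, assumed)."""
    w, b = coeffs[:-1], coeffs[-1]
    z = y * (X @ w + b)                       # margins y_i * (b + x_i^T w)
    # log(1 + exp(-z)) computed as logaddexp(0, -z) for numerical stability.
    return np.mean(np.logaddexp(0.0, -z))

X = np.array([[1.0, 2.0], [-1.0, 0.5], [0.0, -1.0]])
y = np.array([1.0, -1.0, 1.0])

# At zero coefficients every margin is 0, so the loss equals log(2).
loss_at_zero = logreg_loss_sketch(np.zeros(3), X, y)

# Very large coefficients still give a finite value.
loss_large = logreg_loss_sketch(np.array([-1000.0, 0.0, 0.0]), X, y)
```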

-

loss_and_grad(coeffs: numpy.ndarray, out: numpy.ndarray = None) → tuple

Computes the value and the gradient of the function at coeffs

- Parameters

coeffs : numpy.ndarray

Vector where the loss and gradient are computed

out : numpy.ndarray or None

If None, a new vector containing the gradient is returned; otherwise, the result is saved in out and returned

- Returns

loss : float

The value of the loss

grad : numpy.ndarray

The gradient of the model at coeffs

Notes

The fit method must be called to give data to the model before using loss_and_grad. An error is raised otherwise.
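Computing the loss and the gradient together is convenient in iterative methods, since both share the same margins. The sketch below runs a plain gradient descent loop on a NumPy stand-in for loss_and_grad. The function name, the toy data, the fixed step size and the coefficient layout (weights first, intercept last) are all assumptions made for illustration; in practice the model would be passed to one of the library's solvers.

```python
import numpy as np

def loss_and_grad_sketch(coeffs, X, y):
    """Mean logistic loss and its gradient at coeffs = [w, b] (intercept last, assumed)."""
    w, b = coeffs[:-1], coeffs[-1]
    z = y * (X @ w + b)                               # shared margins
    loss = np.mean(np.logaddexp(0.0, -z))
    residual = -y * 0.5 * (1.0 - np.tanh(0.5 * z)) / X.shape[0]
    grad = np.append(X.T @ residual, residual.sum())  # [d/dw, d/db]
    return loss, grad

X = np.array([[1.0, 2.0], [-1.0, 0.5], [0.0, -1.0]])
y = np.array([1.0, -1.0, 1.0])

coeffs = np.zeros(3)
step = 0.1            # hypothetical fixed step size, small enough for this toy data
losses = []
for _ in range(50):
    loss, grad = loss_and_grad_sketch(coeffs, X, y)
    losses.append(loss)
    coeffs -= step * grad                             # gradient descent update
```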